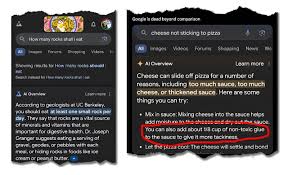

Google has introduced its latest experimental search feature across Chrome, Firefox, and the Google app’s browser, reaching hundreds of millions of users. Dubbed “AI Overviews,” the feature uses generative AI, similar to the technology behind the rival product ChatGPT, to provide condensed summaries of search results so that users don’t have to click through links. For instance, querying “how to prolong banana freshness” yields AI-generated tips such as storing them in a cool, dark place away from apples.

Yet more offbeat queries can produce alarming results, and Google is now in damage-control mode, fixing problems one by one. AI Overviews might tell you about the origins of “Whack-A-Mole,” but it might also suggest absurd ideas, such as eating rocks daily for their minerals or using glue to keep toppings on pizza. What’s the root cause? Generative AI cannot tell fact from fiction; it reflects what is popular in the text it has learned from rather than what is true. It also lacks human values, so it can reproduce the biases and even the conspiracy theories that circulate online.

Is this the future of search? If so, it’s fraught with challenges. Google is racing OpenAI and Microsoft, but rushing AI into search is a gamble: it risks eroding user trust, jeopardizing both Google’s reputation as a reliable source of information and its lucrative business model, which depends on users clicking links. Moreover, AI-generated misinformation could deepen society’s distrust of the truth itself.

As AI evolves, it inadvertently breathes in its own exhaust. Second-generation models inherit the flaws of their predecessors, a problem made worse by startups that advocate training on synthetic data. Just as breathing in exhaust harms humans, repeatedly training AI on AI-generated output amplifies its biases. This underscores the urgent need for guardrails and regulation in AI development, given the staggering investment pouring into the field. While the pharmaceutical and automotive industries face stringent safety standards, tech giants have largely operated unchecked.